New Web Order

The World Wide Web is undergoing a relentless campaign of enshittification: worse design, worse maintenance, worse technical implementations, and so on. Web pages, for example, were once meant to be roughly 85% content worth reading, 10% HTML, and 5% CSS. Nowadays, we get:

- 50% ads, trackers, analytics, password sniffers, captchas, cryptominers, CDNs, MitM attacks (such as Cloudflare's DDoS protection), fingerprinting, social media buttons, NFTs, and video embeds.

- 40% unnecessary bloat such as CSS frameworks, JavaScript frameworks, and non-free JavaScript code, as well as JavaScript itself.

- 9% propaganda and slop.

- 1% actual content we want to see.

Of course, these issues only concern content. Once we analyze the core technology that powers the modern web, we quickly realize that the "clearnet" is built on fundamentally centralized infrastructure. HTTP (Hypertext Transfer Protocol) is the backbone of the web, and it relies on a hierarchical, bureaucratic system that enforces control at multiple levels, such as:

- Server-dependent model: all communication is funneled through intermediaries rather than direct peer-to-peer connections, giving a handful of entities the unchecked power to monitor, restrict, and terminate access.

- DNS: instead of allowing direct peer-to-peer connections, the web forces users to go through a centralized naming system overseen by ICANN and a handful of registrars.

- Domain registrars: owning a domain is essentially a rental arrangement in which corporations dictate terms, suspend domains, or deplatform users at will.

- TLS (formerly SSL) certificates and their CAs: secure communication (HTTPS) is only possible through an authoritative system in which mostly government-backed and corporate entities decide who gets a "trusted" certificate, which creates a gatekeeping mechanism.

- Cloud hosting and CDN monopolies: almost everything is powered by a few corporations (Amazon Web Services, Google Cloud, Microsoft Azure, and Cloudflare), meaning that a significant portion of the internet can be censored or deplatformed instantly if those entities decide so.

- IP addresses and ISPs: a handful of providers control internet access, allowing them to throttle, censor, or monitor users' traffic in collaboration with governments and corporations.

- Web client monopolization: Google's Chromium, and to a lesser extent Mozilla's Firefox, dominate the browser market, shaping web standards and phasing out features that don't align with corporate interests.

- Web standard dictatorship: W3C and WHATWG, among others, decide how the web evolves, adding and removing features based on corporate interests rather than user freedom.

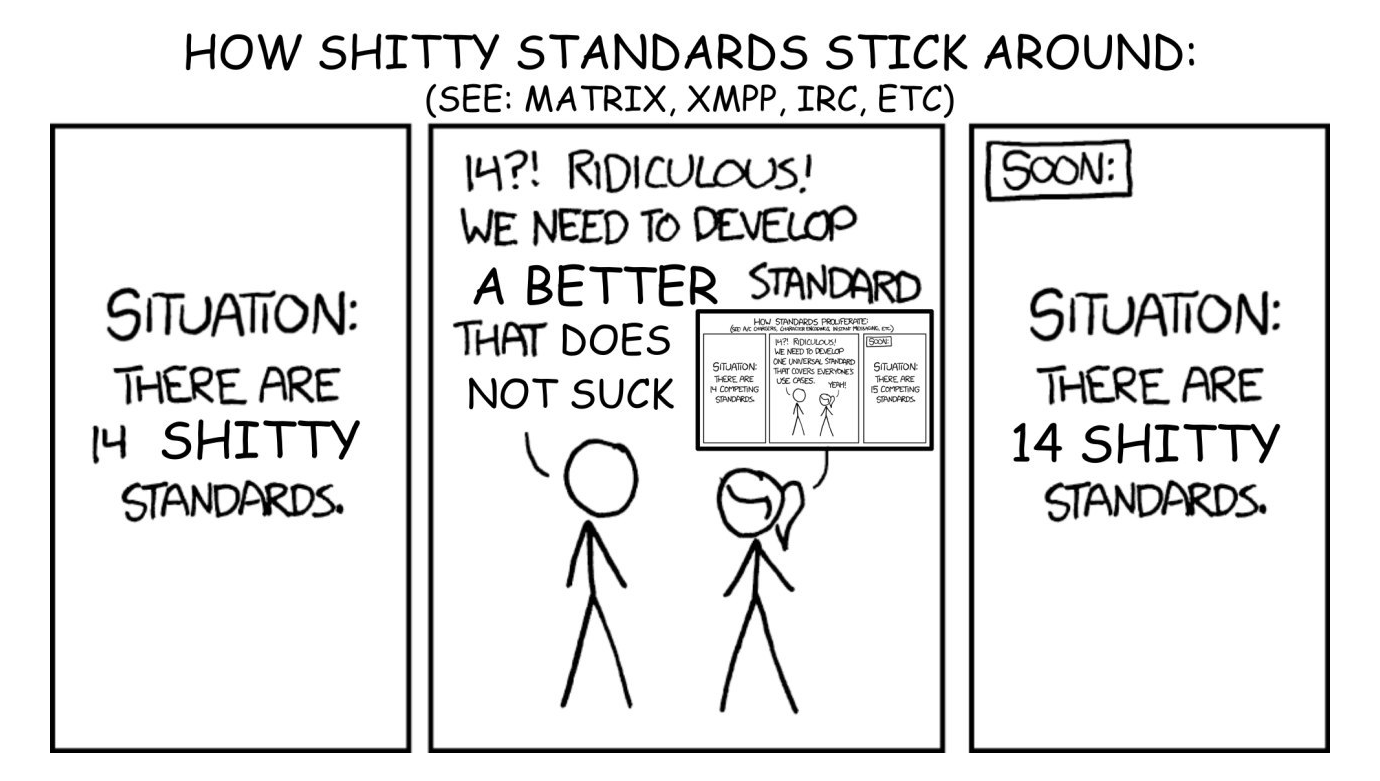

Despite many attempts at concocting decentralized protocols, the vast majority of internet activity still occurs within this centralized framework, and most of the proposed solutions fail due to both poor user adoption and fundamental design flaws. This article reimagines the most important aspects of the web in an attempt to draw a roadmap towards a seemingly perfect solution, rather than ignoring the fact that shitty technology exists and doing nothing about it in the name of avoiding the proliferation of "competing standards."

Inappropriate application of xkcd #927.

Solution ~

The web has two core technical problems:

- The Protocol (i.e., the clearnet)

- The Clients (i.e., web browsers)

1. The Protocol ~

As highlighted above, multiple decentralized protocols have already tried to reinvent the wheel. All of them currently have serious defects, but the closest model of how things should work on the web is a blend of I2P and BitTorrent, much like Hyphanet.

The protocol I have in mind doesn't deal in websites; it only transmits data. There's no fixed way to display content (as web browsers do with HTML). Instead, each client interprets the data it receives however it sees fit. One client might show a chat, another a video, and another a forum post, as will be seen below, keeping things flexible and decentralized.
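To make the "same data, different clients" idea concrete, here is a minimal sketch. Everything in it is an assumption made for illustration: the JSON payload layout, the field names `author` and `body`, and the three client functions are hypothetical and not part of any existing protocol.

```python
import json

# Hypothetical payload: the protocol itself only moves opaque bytes around.
# The JSON layout and field names are assumptions made for this sketch.
payload = json.dumps({"author": "alice", "body": "hello, world"}).encode("utf-8")


def chat_client(data: bytes) -> str:
    """Render the payload as a one-line chat message."""
    msg = json.loads(data)
    return f"<{msg['author']}> {msg['body']}"


def forum_client(data: bytes) -> str:
    """Render the same payload as a forum post with a header line."""
    msg = json.loads(data)
    return f"Posted by {msg['author']}\n\n{msg['body']}"


def raw_client(data: bytes) -> str:
    """Assume nothing about the content; show the first 8 bytes as hex."""
    return data[:8].hex()
```

The point of the sketch is that none of the three functions is privileged: the bytes on the wire stay the same, and presentation is entirely a client-side decision.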

For transmission, the protocol acts as an anonymizing overlay network: data is encrypted and relayed from one peer to another through a dynamic, randomized set of volunteer-maintained nodes. Nodes are ranked by their historical performance and prioritized accordingly, balancing anonymity and speed across the network.
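One way to combine "randomized" with "prioritized by historical performance" is weighted random selection: better-performing nodes are picked more often, but no node is ever guaranteed a spot. The sketch below is only an illustration under stated assumptions. The `Node` record, its success/failure counters, the smoothed scoring formula, and the `pick_path` helper are all hypothetical, and encryption is left out entirely.

```python
import random
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    successes: int = 0  # relayed transfers that completed (assumed metric)
    failures: int = 0   # relayed transfers that timed out or were dropped

    def score(self) -> float:
        # Laplace-smoothed success rate: a node with no history scores 0.5
        # instead of being excluded outright.
        return (self.successes + 1) / (self.successes + self.failures + 2)


def pick_path(nodes: list[Node], hops: int, rng: random.Random) -> list[Node]:
    """Pick `hops` distinct relays, favouring nodes with a better history."""
    if hops > len(nodes):
        raise ValueError("not enough nodes for the requested path length")
    pool = list(nodes)
    path = []
    for _ in range(hops):
        weights = [n.score() for n in pool]
        chosen = rng.choices(pool, weights=weights, k=1)[0]
        path.append(chosen)
        pool.remove(chosen)  # never reuse a relay within one path
    return path
```

Because the choice stays probabilistic, a slow or new node still gets occasional traffic, which keeps paths harder to predict than a strict "fastest nodes first" ranking would.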

In addition, to get rid of monopolization (such as the clearnet's control over domain names), discoverability of content on the protocol would be decentralized: every piece of data is identified by a random-looking cryptographic hash rather than a registered name. Discoverability is left to the people willing to share hashes, through manual exchange, search engines, forums, trackers, etc., and is not managed by the protocol itself.
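A minimal sketch of hash-based identifiers follows. It assumes the identifier is the SHA-256 digest of the data itself (content addressing); the text above does not specify how the hash is produced, so that choice, along with the `content_id`, `verify`, and `LocalStore` names, is purely illustrative.

```python
import hashlib


def content_id(data: bytes) -> str:
    """Return the identifier under which `data` would be published.

    Assumption: the ID is the SHA-256 digest of the content itself.
    """
    return hashlib.sha256(data).hexdigest()


def verify(expected_id: str, data: bytes) -> bool:
    """Check that bytes received from an untrusted peer match the requested ID."""
    return content_id(data) == expected_id


class LocalStore:
    """Toy in-memory store keyed by content ID (stands in for a peer's cache)."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        cid = content_id(data)
        self._blobs[cid] = data
        return cid

    def get(self, cid: str) -> bytes:
        data = self._blobs[cid]
        if not verify(cid, data):
            raise ValueError("stored data does not match its identifier")
        return data
```

Under this assumption nobody has to register or rent a name: whoever holds the hash can request the data from any peer and check for themselves that what arrived is what was asked for.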

Moreover, the proposed protocol relies on decentralized (i.e., peer-to-peer) data distribution: any device that holds a given piece of data can help distribute it, as long as both the sender(s) and the recipient are online, similar to how torrenting works. To improve availability, peers should also be able to designate and manage external servers, either centralized or decentralized, to host data 24/7.
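The availability argument can be illustrated with a toy swarm model. This is a sketch under simplifying assumptions, not a networking implementation: the `Peer` record, the `providers` and `fetch` helpers, and the opaque string identifiers are all hypothetical, and transfers are modelled as plain dictionary copies.

```python
from dataclasses import dataclass, field


@dataclass
class Peer:
    name: str
    online: bool = True
    # Data this peer holds, keyed by an opaque identifier (assumed format).
    blobs: dict[str, bytes] = field(default_factory=dict)


def providers(swarm: list[Peer], cid: str) -> list[Peer]:
    """Peers that hold `cid` and are currently reachable."""
    return [p for p in swarm if p.online and cid in p.blobs]


def fetch(swarm: list[Peer], requester: Peer, cid: str) -> bool:
    """Copy `cid` from any available provider; the requester then holds it too."""
    sources = providers(swarm, cid)
    if not sources:
        return False  # nobody holding the data is online right now
    requester.blobs[cid] = sources[0].blobs[cid]
    return True
```

In this model a designated hosting server is simply a peer whose `online` flag never flips to `False`: once it has fetched a piece of data, that data stays retrievable even after the original publisher disconnects.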

2. The Clients ~

Instead of a single "web browser" through which everything on the web is accessed, different programs (or clients) should each present a different kind of data on the web, each with its own implementation:

- Personal websites.

- Text chatting.

- Voice chatting.

- Streaming.

- File sharing.

- Content tracking.

- Online gaming.

Of course, I reserve the right to extend my hatred of bloatware here (see Things I Dislike > Bloatware).